Generative AI is transforming how we verify identities and process documents. For legitimate organizations, it offers unprecedented speed and accuracy in document validation. But the same capabilities are now in the hands of fraudsters, who can create photorealistic passports, IDs, driver’s licenses, invoices and even birth certificates in minutes.

These aren’t the crude fakes you could once spot with a quick glance. Modern AI forgeries replicate official fonts, layouts, watermarks, and holograms so convincingly they can bypass both human reviewers and outdated automated systems.

The scale of the threat is staggering:

- $200 million in deepfake-related fraud losses were recorded globally in just the first quarter of 2025.

- Scam website creation jumped to 38,000 per day worldwide between May 2024 and April 2025.

- Losses from generative AI‑enabled fraud in the United States alone are projected to reach $40 billion by 2027.

If your onboarding, booking, or compliance processes rely solely on static document checks, now is the time to rethink them. The only viable defense against AI‑generated document fraud is a layered, multi-factor verification system: one that combines forensic document analysis with device trust profiling, geolocation signals, behavioral checks, and more.

That’s exactly what we’ll cover in this guide, complete with real-world examples and actionable steps you can take to protect your business today.

Key Takeaways:

- AI speeds up verification and fraud: Generative AI makes document checks faster and more accurate, but also lets criminals create convincing fake documents in minutes.

- Traditional checks fall short: Simple visual or static inspections can’t reliably catch AI‑generated fakes.

- Advanced tools are needed: Layered defenses, like Klippa DocHorizon, help detect forgeries that AI can produce.

- Fakes are easier than ever: Cheap, accessible AI tools make industries such as finance, insurance, and travel high‑risk targets.

- Use multiple verification methods: Combining document forensics, device validation, network checks, and biometrics boosts fraud detection.

- Digital ID is the future: Verified digital identity systems will be essential for compliance and protecting against AI‑powered fraud.

What Is Generative AI Fraud?

Generative AI fraud refers to the malicious use of advanced AI models such as GPT‑5, Midjourney, and GhostGPT to create highly convincing counterfeit content, including documents, images, videos, and even synthetic voices.

When applied to identity verification, these AI systems can produce fake passports, driver’s licenses, national IDs, invoices, and legal documents so realistic they can deceive both trained professionals and outdated Know Your Customer (KYC) systems.

Unlike traditional document forgery, which required specialist equipment, access to materials, and skilled manual craftsmanship, AI‑driven forgery is:

- Scalable: Fraudsters can automate mass document creation to run scams at industrial scale

- Faster: A convincing fake can now be generated in minutes rather than days or weeks

- Cheaper: All it takes is an internet connection and access to AI image or document generators

The threat does not stop at documents. Fraudsters frequently combine AI‑generated identity papers with:

- Deepfake selfies and liveness videos to bypass biometric verification

- AI‑manipulated audio and video to impersonate executives or claimants

- Synthetic identities that blend stolen personal information with fabricated records to fool even database checks

The result is a new breed of fraud that is harder to detect, easier to execute, and faster to deploy, making prevention and detection an urgent priority for businesses in every sector.

AI‑Generated Document Types Most at Risk

Fraudsters target high‑value documents that unlock access to financial services, travel, government benefits or business transactions.

The most commonly targeted document types include:

Synthetic Identity Documents

Passports, driver’s licenses and national ID cards are prime targets. AI can replicate security features such as fonts, layouts, holograms and watermarks so convincingly that most image‑based verification systems cannot tell the difference.

AI‑Forged Legal Documents and Court Orders

Fraudsters are using generative AI to produce counterfeit contracts, injunctions and other legal papers complete with realistic seals, signatures and formatting. These are often used to trick firms into disclosing sensitive data or transferring funds.

AI‑Generated Invoices and Payment Records

By mimicking the style, logo placement and data fields of genuine vendors, criminals create invoices that can slip past human reviewers and outdated payment approval systems, often resulting in significant financial losses.

Fake Certifications and Diplomas

AI enables the creation of forged diplomas, certificates and professional licenses that closely mimic authentic documents. These fakes are frequently used to gain employment, secure contracts or meet compliance requirements without legitimate credentials.

Traditional physical security features such as holograms, watermarks and specialty inks were designed for in‑person checks and are ineffective when documents are presented as static images during digital onboarding or verification.

Why Traditional Document Verification Falls Short Against AI

Most identity verification systems in use today were designed for a pre‑AI world. They were built to detect crude forgeries or spot obvious signs of tampering. The reality in 2025 is very different.

Generative AI models can now produce forged documents that:

- Perfectly replicate fonts, layouts, and design elements

- Include highly accurate personal data zones and machine‑readable areas

- Simulate realistic photos that blend seamlessly into the document’s design
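Machine-readable zones show why these elements prove little on their own: the MRZ's integrity check is public arithmetic. ICAO Doc 9303 defines each check digit as a weighted sum of character values (weights 7, 3, 1, repeating), taken modulo 10, so any generator can compute it correctly. The Python sketch below implements that published rule. It catches careless typos or edits, not a competent forgery, so treat a valid check digit as a baseline rather than as evidence of authenticity. The sample fields come from the ICAO specimen passport, whose holder is fictional.

```python
def mrz_char_value(ch: str) -> int:
    """Numeric value of an MRZ character per ICAO Doc 9303."""
    if "0" <= ch <= "9":
        return ord(ch) - ord("0")
    if "A" <= ch <= "Z":
        return ord(ch) - ord("A") + 10  # A=10 ... Z=35
    if ch == "<":
        return 0  # filler character
    raise ValueError(f"invalid MRZ character: {ch!r}")


def mrz_check_digit(field: str) -> int:
    """Weighted sum with repeating weights 7, 3, 1, taken modulo 10."""
    weights = (7, 3, 1)
    return sum(mrz_char_value(c) * weights[i % 3] for i, c in enumerate(field)) % 10


# Fields from the ICAO specimen passport (fictional holder)
print(mrz_check_digit("L898902C3"))  # document number -> 6
print(mrz_check_digit("740812"))     # date of birth   -> 2
print(mrz_check_digit("120415"))     # date of expiry  -> 9
```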

For many organizations, the assumption has been simple: if the image looks authentic and its security features are visible, the document is genuine. In the era of AI, that assumption no longer holds.

An industry expert recently demonstrated this by creating an exact AI replica of his own passport in under five minutes. His conclusion was blunt: photo-based KYC is done. Game over.

This is not just about image replication. Fraudsters can now combine forged identity documents with deepfake selfies, synthetic liveness videos and biometric spoofs. That means even systems designed to check a face against a document photo can be fooled.

The accessibility of these tools has removed the barriers that once limited high‑quality document forgery to professional counterfeiters. Today, anyone with a laptop and internet connection can produce convincing fakes capable of bypassing legacy KYC software.

For businesses in finance, travel, e‑commerce, insurance and many other industries, this shift represents a critical change in the risk landscape. Relying solely on document image checks is no longer a safe strategy.

How to Detect AI‑Generated Documents: Step-by-Step Guide

Detecting AI‑generated documents requires more than a quick visual inspection. The most effective approach is a layered process that checks the authenticity of the document from multiple angles at the same time. This makes it significantly harder for fraudsters to bypass verification.

1. Language and Semantic Analysis

Examine the text within the document for subtle inconsistencies or unnatural patterns. AI‑generated text is often overly structured, too perfect or repetitive.

Use AI‑origin detection tools such as GPTZero or Originality.ai to flag content that may have been created by a language model.
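Commercial detectors rely on their own trained models, which we won't try to reproduce here. As a much simpler illustration of the "repetitive" signal, the hypothetical function below measures what share of word trigrams recur in a text. A high ratio is at most a prompt for closer review. Legal boilerplate is legitimately repetitive too, so a score like this should never decide a case on its own.

```python
import re
from collections import Counter


def repeated_trigram_ratio(text: str) -> float:
    """Share of word trigrams that occur more than once in `text` (0.0 to 1.0)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    trigrams = [tuple(words[i:i + 3]) for i in range(len(words) - 2)]
    if not trigrams:
        return 0.0
    counts = Counter(trigrams)
    # Count every occurrence of a trigram that appears at least twice
    repeated = sum(n for n in counts.values() if n > 1)
    return repeated / len(trigrams)


sample = "The payment is due within 30 days. The payment is due within 30 days of receipt."
print(round(repeated_trigram_ratio(sample), 2))  # 0.71
```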

2. Metadata Forensics

Inspect the document’s hidden data, such as creation date, author, editing software and device information. Many AI‑generated files have incomplete or missing metadata, or timestamps that do not match the expected workflow.

Klippa’s Intelligent Document Processing can extract this metadata instantly and flag suspicious entries.
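The rules behind a metadata check are easy to sketch. The Python example below assumes the metadata has already been extracted (for PDFs, typically fields such as Creator, Producer, CreationDate and ModDate) into a plain dictionary. The field names, the expected-producer allowlist and the sample values are all hypothetical, and this is not a description of how Klippa's product works internally.

```python
from __future__ import annotations

from datetime import datetime, timezone

# Hypothetical field names; map them from whatever extractor you use
REQUIRED_FIELDS = ("creator", "producer", "created", "modified")


def metadata_flags(meta: dict, expected_producers: set[str],
                   now: datetime | None = None) -> list[str]:
    """Return human-readable flags for suspicious document metadata.

    Assumes 'created' and 'modified' are already parsed, timezone-aware datetimes.
    """
    now = now or datetime.now(timezone.utc)
    flags = [f"missing {field}" for field in REQUIRED_FIELDS if not meta.get(field)]
    created, modified = meta.get("created"), meta.get("modified")
    if created and modified and modified < created:
        flags.append("modified before created")
    if created and created > now:
        flags.append("creation date in the future")
    producer = meta.get("producer")
    if producer and producer not in expected_producers:
        flags.append(f"unexpected producer: {producer}")
    return flags


meta = {
    "creator": "Word processor",          # hypothetical values
    "producer": "UnknownPDFTool 1.0",
    "created": datetime(2025, 3, 2, 9, 0, tzinfo=timezone.utc),
    "modified": datetime(2025, 3, 1, 9, 0, tzinfo=timezone.utc),
}
print(metadata_flags(meta, {"ExampleCorp Invoicing 4.2"},
                     now=datetime(2025, 6, 1, tzinfo=timezone.utc)))
# ['modified before created', 'unexpected producer: UnknownPDFTool 1.0']
```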

3. Formatting and Layout Checks

Look for misaligned margins, inconsistent fonts or spacing, and header or footer anomalies. Fraudsters often replicate the overall design but make small mistakes in alignment or typography that automated style-matching tools can detect.
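A layout check can start from the bounding boxes an OCR engine returns. The sketch below assumes each text line in a single-column region comes with its left edge and height in pixels (the `TextLine` structure and the tolerances are hypothetical). It flags lines that break from the dominant margin or the typical text height. Production systems would compare against a known template for the document type rather than a single page's own norm, since headings and indents vary legitimately.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass
from statistics import median


@dataclass
class TextLine:
    text: str
    x_left: float   # left edge of the line's bounding box, in pixels
    height: float   # line height in pixels, used as a proxy for font size


def layout_anomalies(lines: list[TextLine], margin_tol: float = 4.0,
                     height_tol: float = 0.15) -> list[str]:
    """Flag lines whose left margin or text height departs from the block's norm."""
    if not lines:
        return []
    # Dominant margin = most common (rounded) left edge; typical height = median
    dominant_x = Counter(round(line.x_left) for line in lines).most_common(1)[0][0]
    typical_h = median(line.height for line in lines)
    issues = []
    for line in lines:
        offset = line.x_left - dominant_x
        if abs(offset) > margin_tol:
            issues.append(f"margin offset {offset:+.0f}px: {line.text!r}")
        if abs(line.height - typical_h) / typical_h > height_tol:
            issues.append(f"text height {line.height:.0f}px vs {typical_h:.0f}px: {line.text!r}")
    return issues


lines = [
    TextLine("Invoice no. 10442", 72, 14),
    TextLine("Date: 2025-03-01", 72, 14),
    TextLine("Amount due: 1,250.00", 72, 14),
    TextLine("Account: 000-000-000", 81, 17),  # hypothetical altered line
]
for issue in layout_anomalies(lines):
    print(issue)
# margin offset +9px: 'Account: 000-000-000'
# text height 17px vs 14px: 'Account: 000-000-000'
```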

4. Image and Pixel Level Analysis

Use reverse image search to confirm whether images or document photos appear elsewhere online. Pixel‑forensic analysis can reveal cloning artifacts, inconsistent lighting, or grayscale intensity variations that show manipulation.
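Cloning leaves a tell-tale trace: the same patch of pixels appears twice in one image. The naive copy-move detector below records every small block of a grayscale image and reports identical, non-flat blocks that sit far apart. It is a sketch under simplifying assumptions (an uncompressed image given as nested lists, exact matches only). Published copy-move methods match features such as DCT coefficients or keypoints instead, so that detection survives JPEG recompression and light retouching.

```python
from __future__ import annotations

from collections import defaultdict


def find_cloned_blocks(pixels: list[list[int]], block: int = 4, min_distance: int = 8):
    """Naive copy-move check: return pairs of identical, non-uniform pixel blocks.

    `pixels` is a grayscale image given as rows of 0-255 values. Returns a list
    of ((y1, x1), (y2, x2)) top-left corners of matching blocks that lie at
    least `min_distance` pixels apart (Manhattan distance).
    """
    seen: dict[tuple[int, ...], list[tuple[int, int]]] = defaultdict(list)
    height, width = len(pixels), len(pixels[0])
    for y in range(height - block + 1):
        for x in range(width - block + 1):
            patch = tuple(pixels[y + dy][x + dx] for dy in range(block) for dx in range(block))
            if max(patch) == min(patch):
                continue  # flat areas such as blank background repeat naturally
            seen[patch].append((y, x))
    pairs = []
    for positions in seen.values():
        for i, a in enumerate(positions):
            for b in positions[i + 1:]:
                if abs(a[0] - b[0]) + abs(a[1] - b[1]) >= min_distance:
                    pairs.append((a, b))
    return pairs


# Synthetic 16x16 image with unique pixel values, then a cloned 4x4 patch
img = [[y * 16 + x for x in range(16)] for y in range(16)]
for dy in range(4):
    for dx in range(4):
        img[10 + dy][10 + dx] = img[1 + dy][1 + dx]
print(find_cloned_blocks(img))  # [((1, 1), (10, 10))]
```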

5. Cross Validation with Trusted Data Sources

Verify the information in the document against authoritative registries or databases. For example, confirm identity details with government records or company information with official corporate databases.
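Cross-validation mostly comes down to careful comparison. The sketch below assumes you have already fetched a trusted record (for example from a company registry, represented here by a hypothetical dictionary) and compares it with the fields extracted from the document. Values are normalized for case, accents and whitespace first, so that harmless formatting differences do not trigger false alarms.

```python
from __future__ import annotations

import unicodedata


def normalize(value: str) -> str:
    """Casefold, strip accents and collapse whitespace before comparing."""
    decomposed = unicodedata.normalize("NFKD", value)
    no_accents = "".join(c for c in decomposed if not unicodedata.combining(c))
    return " ".join(no_accents.casefold().split())


def mismatched_fields(extracted: dict[str, str], trusted: dict[str, str]) -> list[str]:
    """Return the fields where the document disagrees with the trusted record."""
    return [
        field for field, expected in trusted.items()
        if normalize(extracted.get(field, "")) != normalize(expected)
    ]


# Hypothetical values: fields read from a document vs. a registry record
from_document = {"company_name": "Acme  Trading B.V.", "registration_no": "12345678",
                 "address": "Main Street 1"}
from_registry = {"company_name": "ACME Trading B.V.", "registration_no": "12345678",
                 "address": "Harbour Road 9"}
print(mismatched_fields(from_document, from_registry))  # ['address']
```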

6. Multi-Factor Identity Verification

Document analysis alone is no longer enough. Combine it with other independent checks in a single, orchestrated process. Multi-factor verification can include device trust profiling, location and network pattern analysis, and biometric confirmation such as liveness detection. Performing these checks in parallel increases security and reduces the chance of successful fraud.
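The benefit of independent layers is multiplicative. If each of three independent checks misses a given forgery 10% of the time, all three miss it only 0.1 × 0.1 × 0.1 = 0.001, or 0.1%, of the time. Real signals are rarely fully independent, so treat that figure as a best case. The Python sketch below shows the orchestration pattern: four hypothetical check functions with toy scoring rules run concurrently, and the session is sent for review if any single layer reports high risk.

```python
from __future__ import annotations

from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Each check returns a risk score in [0, 1]. These are hypothetical stand-ins
# for real document, device, network and biometric services.
Check = Callable[[dict], float]


def document_check(session: dict) -> float:
    return 0.9 if session.get("metadata_flags") else 0.1


def device_check(session: dict) -> float:
    return 0.8 if session.get("emulator") else 0.1


def network_check(session: dict) -> float:
    return 0.7 if session.get("ip_country") != session.get("document_country") else 0.1


def liveness_check(session: dict) -> float:
    return 0.1 if session.get("liveness_passed") else 0.9


CHECKS: dict[str, Check] = {
    "document": document_check, "device": device_check,
    "network": network_check, "liveness": liveness_check,
}


def verify(session: dict, threshold: float = 0.5) -> tuple[str, dict[str, float]]:
    """Run all checks in parallel; any single high-risk layer triggers review."""
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(fn, session) for name, fn in CHECKS.items()}
        scores = {name: f.result() for name, f in futures.items()}
    decision = "review" if any(s >= threshold for s in scores.values()) else "pass"
    return decision, scores


clean = {"metadata_flags": [], "emulator": False, "ip_country": "NL",
         "document_country": "NL", "liveness_passed": True}
print(verify(clean)[0])                            # pass
print(verify({**clean, "ip_country": "US"})[0])    # review
```

Requiring every layer to pass, rather than averaging scores, reflects the point above: a forger has to beat all the checks at once.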

Real Life Cases of AI-Generated Document Fraud

Understanding how AI-driven document fraud has already unfolded in the real world helps you recognise how these attacks might target your organisation. Here are notable incidents and tactics fraudsters have used successfully.

AI-Generated Passport in Under Five Minutes

As we’ve seen above, a security professional demonstrated on LinkedIn how he could use an AI model to create an exact digital copy of his own passport in less than five minutes. The result would likely pass many automated KYC document checks without raising alarms. This case shows that time and skill are no longer barriers to high-quality forgery.

Fake Court Orders Used in Fund Release Scams

In Texas, scammers used AI to generate convincing court orders complete with seals, signatures and case references. A law firm nearly released escrow funds before the documents were identified as fraudulent.

Vendor Invoice Deepfake in Australia

A mid-sized Australian law firm received what appeared to be a long-standing vendor’s invoice. AI had been used to replicate the writing style, invoice formatting and reference numbers. The only difference was a small change to the bank account details for payment.

Executive Voice Clone and Document Pairing

In Hong Kong, an employee transferred 25 million USD after participating in a video conference where all the attendees were deepfake versions of company executives. The attackers used matching forged documents to support the narrative.

These real-world cases confirm that generative AI fraud is not a hypothetical risk. It is already targeting businesses across industries and jurisdictions with increasingly sophisticated tactics.

Legal and Compliance Considerations for AI Fraud

The rapid rise of generative AI‑enabled fraud has not gone unnoticed by regulators. Governments and oversight bodies are introducing laws and guidance to address the misuse of AI, especially in identity verification and financial services. Understanding these requirements is essential to staying compliant and avoiding penalties.

EU Artificial Intelligence Act

Adopted in 2024, the EU AI Act sets out strict requirements for high‑risk AI systems. It prohibits certain manipulative and deceptive AI practices, requires AI‑generated or manipulated content such as deepfakes to be disclosed, and mandates rigorous risk management for identity verification technologies that fall under high‑risk categories.

General Data Protection Regulation (GDPR)

GDPR obligations apply whenever personal data is processed for verification. This includes establishing a lawful basis for processing (for example, consent), preventing data breaches, and using privacy‑preserving technologies such as verified digital identities.

eIDAS 2.0

The updated European eIDAS framework supports secure digital identity wallets, each containing cryptographically signed credentials. Using such systems can help organisations reduce exposure to fraudulent document submissions while staying aligned with EU requirements for cross‑border trust.

US Deepfake and Digital Impersonation Laws

Several states, including Texas, California and Virginia, criminalise the malicious use of deepfakes, particularly when intended for fraud or identity theft. Proposed federal legislation, such as the DEEP FAKES Accountability Act, would, if passed, require AI‑generated media to carry watermarks and impose penalties for malicious use.

FTC Guidance in the United States

The Federal Trade Commission has issued fraud advisories highlighting the risks of AI‑powered deception in financial services, e‑commerce and employment. Recommended measures include layered verification, employee training and closer monitoring of unusual transactions.

Remaining compliant is not simply a matter of meeting industry standards. It is also about proactively closing the security gaps that legislative bodies are increasingly focused on.

Crisis Response Playbook – What to Do If You Suspect AI Fraud

AI‑generated document fraud can unfold quickly, so having a clear response plan is important. Acting methodically can reduce financial losses and prevent further damage.

1. Pause and Assess

Fraudsters often rely on urgency to push victims into hasty decisions. If a request involves moving funds, changing payment instructions or providing sensitive data, take a moment to slow down and verify authenticity.

2. Verify Through a Trusted Channel

Contact the person or organisation that made the request using an independent and previously confirmed method such as an official phone number or an in‑person meeting. Do not rely on the same communication channel through which the suspicious request came.

3. Inspect the Media Carefully

Check for unusual visual or audio artifacts in documents, videos, or voice communications. This can include slight mismatches between spoken words and lip movements, tonal irregularities or image elements that seem too perfect or inconsistent in lighting.

4. Escalate Internally

Report the incident to your organisation’s fraud prevention or security team immediately. The sooner the alert is raised, the faster controls can be activated to stop potential losses.

5. File Official Reports

Notify the relevant authorities, such as the Federal Trade Commission or the FBI’s Internet Crime Complaint Center (IC3) in the United States, or your local regulators. Detailed reports can help disrupt fraud networks and protect other potential victims.

Having these steps written into your operational policies and staff training ensures everyone knows exactly what to do if fraud is suspected.

Future Proofing Against Generative AI Fraud

Generative AI fraud is evolving quickly, so organisations need strategies that are not just reactive but designed to withstand future threats. Building resilience now will protect both daily operations and long-term customer trust.

Adopt Verified Digital Identities

Shifting away from image-based document submissions towards verified digital identity systems reduces exposure to forged files. Cryptographically signed credentials stored in secure digital wallets allow instant, privacy-focused verification. This means a user can prove eligibility details, such as being over a certain age, without sharing their full date of birth or address.
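Selective disclosure of this kind is commonly built on salted hashes, in the spirit of the IETF SD-JWT format. The issuer hashes each claim together with a random salt and signs the list of digests. The holder later reveals only the claims they choose, and the verifier checks each revealed claim against the signed digests. The sketch below models that flow with Python's standard library. The issuer signature and holder binding are deliberately omitted, so it illustrates the idea rather than a secure implementation. The random salt matters: without it, a verifier could recover a hidden birth date by hashing candidate dates.

```python
from __future__ import annotations

import base64
import hashlib
import json
import secrets


def b64url(data: bytes) -> str:
    """Base64url without padding, as used in JOSE-style formats."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode()


def make_disclosure(name: str, value: object) -> tuple[str, str]:
    """Issuer side: salt one claim and return (disclosure, digest)."""
    salt = b64url(secrets.token_bytes(16))
    disclosure = b64url(json.dumps([salt, name, value]).encode())
    digest = b64url(hashlib.sha256(disclosure.encode()).digest())
    return disclosure, digest


def verify_disclosure(disclosure: str, issued_digests: set[str]) -> tuple[str, object]:
    """Verifier side: accept a revealed claim only if its digest was issued."""
    digest = b64url(hashlib.sha256(disclosure.encode()).digest())
    if digest not in issued_digests:
        raise ValueError("claim was not part of the issued credential")
    padded = disclosure + "=" * (-len(disclosure) % 4)
    _salt, name, value = json.loads(base64.urlsafe_b64decode(padded))
    return name, value


# Issuance (a real issuer would also sign `issued_digests`; omitted here)
claims = {"birth_date": "1990-05-17", "address": "Main Street 1", "age_over_18": True}
issued = {name: make_disclosure(name, value) for name, value in claims.items()}
issued_digests = {digest for _, digest in issued.values()}

# Presentation: the holder reveals only the age claim
print(verify_disclosure(issued["age_over_18"][0], issued_digests))  # ('age_over_18', True)
```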

Integrate Multi-Factor Verification by Design

Make layered verification a permanent part of your onboarding, booking, or transaction approval processes. By checking multiple independent signals at the same time, you make it far harder for AI-generated forgeries to succeed.

Use Predictive AI for Fraud Detection

The same AI technology that creates forgeries can also detect them. Machine learning models trained on fraud patterns, like Klippa’s Document Fraud Detection, can highlight anomalies before they cause losses, even when the forgery closely resembles genuine documents.
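A trained model is beyond the scope of a short example, but a simple statistical baseline shows the idea of anomaly flagging. The sketch below checks a new invoice against the same vendor's history for two signals: a bank account never seen before (the change in the Australian invoice case above) and an amount that is an outlier by the modified z-score. That score uses the median and the median absolute deviation, so a few odd past invoices do not distort it. The data structure, thresholds and sample values are assumptions, not Klippa's Document Fraud Detection.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import median


@dataclass
class Invoice:
    vendor: str
    amount: float
    bank_account: str


def robust_z(value: float, history: list[float]) -> float:
    """Modified z-score: distance from the median in scaled MAD units."""
    med = median(history)
    mad = median(abs(h - med) for h in history)
    if mad == 0:
        return 0.0 if value == med else float("inf")
    return 0.6745 * (value - med) / mad


def invoice_alerts(invoice: Invoice, past: list[Invoice], z_limit: float = 3.5) -> list[str]:
    """Compare a new invoice with the same vendor's previous invoices."""
    history = [p for p in past if p.vendor == invoice.vendor]
    if not history:
        return ["first invoice from this vendor"]
    alerts = []
    if invoice.bank_account not in {p.bank_account for p in history}:
        alerts.append("bank account differs from vendor history")
    z = robust_z(invoice.amount, [p.amount for p in history])
    if abs(z) > z_limit:
        alerts.append(f"amount is an outlier (modified z = {z:.1f})")
    return alerts


# Hypothetical vendor history
past = [Invoice("Acme", a, "ACCT-001") for a in (1200, 1250, 1180, 1220, 1240)]
print(invoice_alerts(Invoice("Acme", 1230, "ACCT-999"), past))
# ['bank account differs from vendor history']
```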

Invest in Continuous Monitoring

Fraud techniques will keep changing. Continuous monitoring with AI-driven systems ensures that new patterns and emerging threats are identified quickly, allowing your security measures to adapt without slow manual intervention.
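One basic building block of continuous monitoring is a rolling alert on your own verification outcomes. The hypothetical monitor below keeps the last N results and raises an alert when the share of flagged documents climbs well above an expected baseline, which can be an early sign of a coordinated fraud campaign. The window size, baseline and multiplier are illustrative values you would tune to your own traffic.

```python
from collections import deque


class RejectionRateMonitor:
    """Alert when the share of flagged documents in a sliding window spikes."""

    def __init__(self, window: int = 500, baseline: float = 0.02, factor: float = 3.0):
        self.results = deque(maxlen=window)  # True = document was flagged
        self.baseline = baseline             # expected flag rate, e.g. from past data
        self.factor = factor                 # how far above baseline counts as a spike

    def record(self, flagged: bool) -> bool:
        """Store one outcome; return True if the window's flag rate is too high."""
        self.results.append(flagged)
        if len(self.results) < self.results.maxlen:
            return False  # wait for a full window before judging
        rate = sum(self.results) / len(self.results)
        return rate > self.baseline * self.factor


monitor = RejectionRateMonitor(window=100, baseline=0.02, factor=3.0)
for flagged in [True, True] + [False] * 98:
    monitor.record(flagged)
print(any(monitor.record(True) for _ in range(10)))  # True
```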

Stay Informed on Regulation and Threat Intelligence

Following legal developments such as the EU AI Act or new deepfake laws helps you align with compliance requirements while staying aware of newly identified scams. Regular interaction with industry threat intelligence networks can give your team early insights into fraud trends.

Future proofing is about more than adopting new tools. It is about embedding a culture of verification, vigilance and agility throughout your organisation.

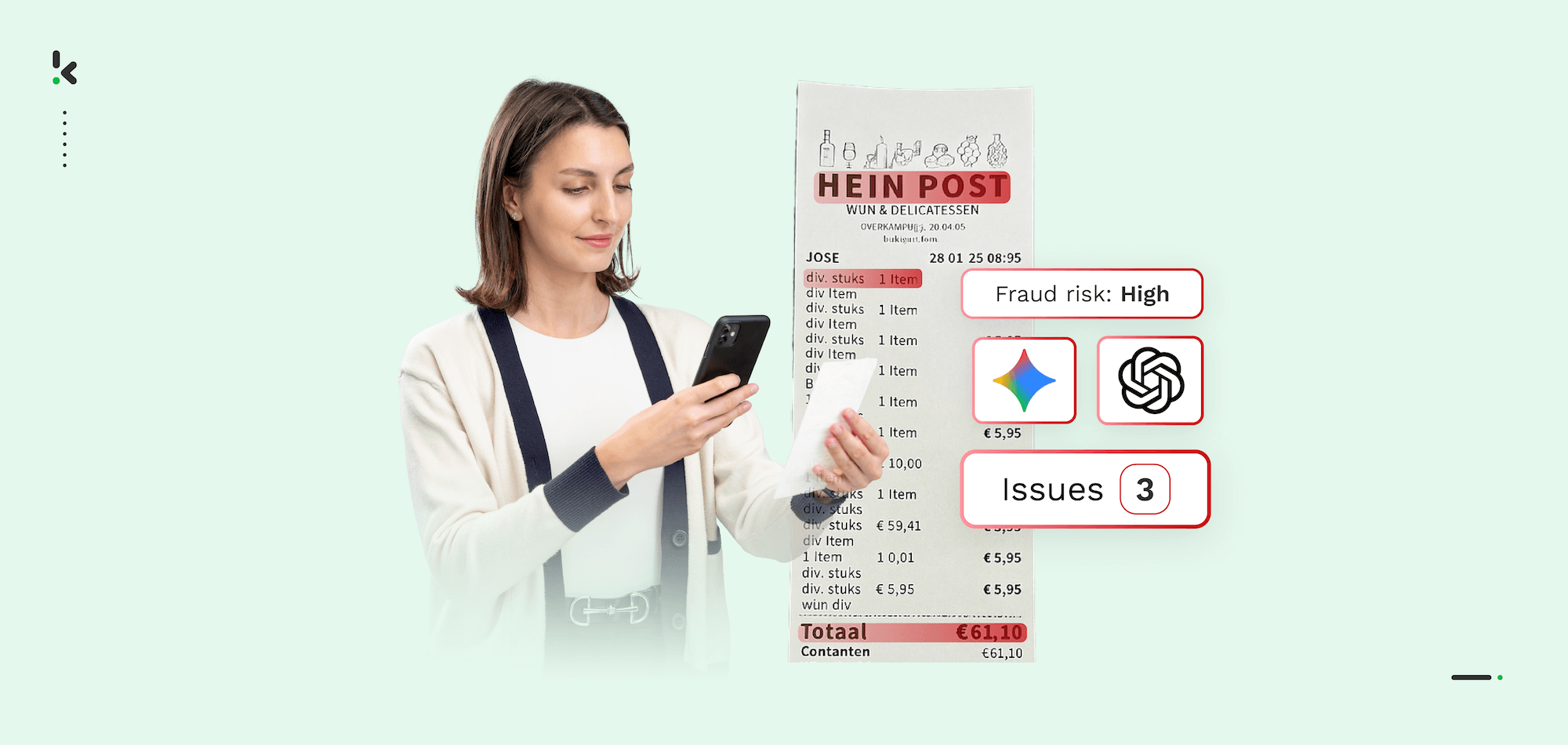

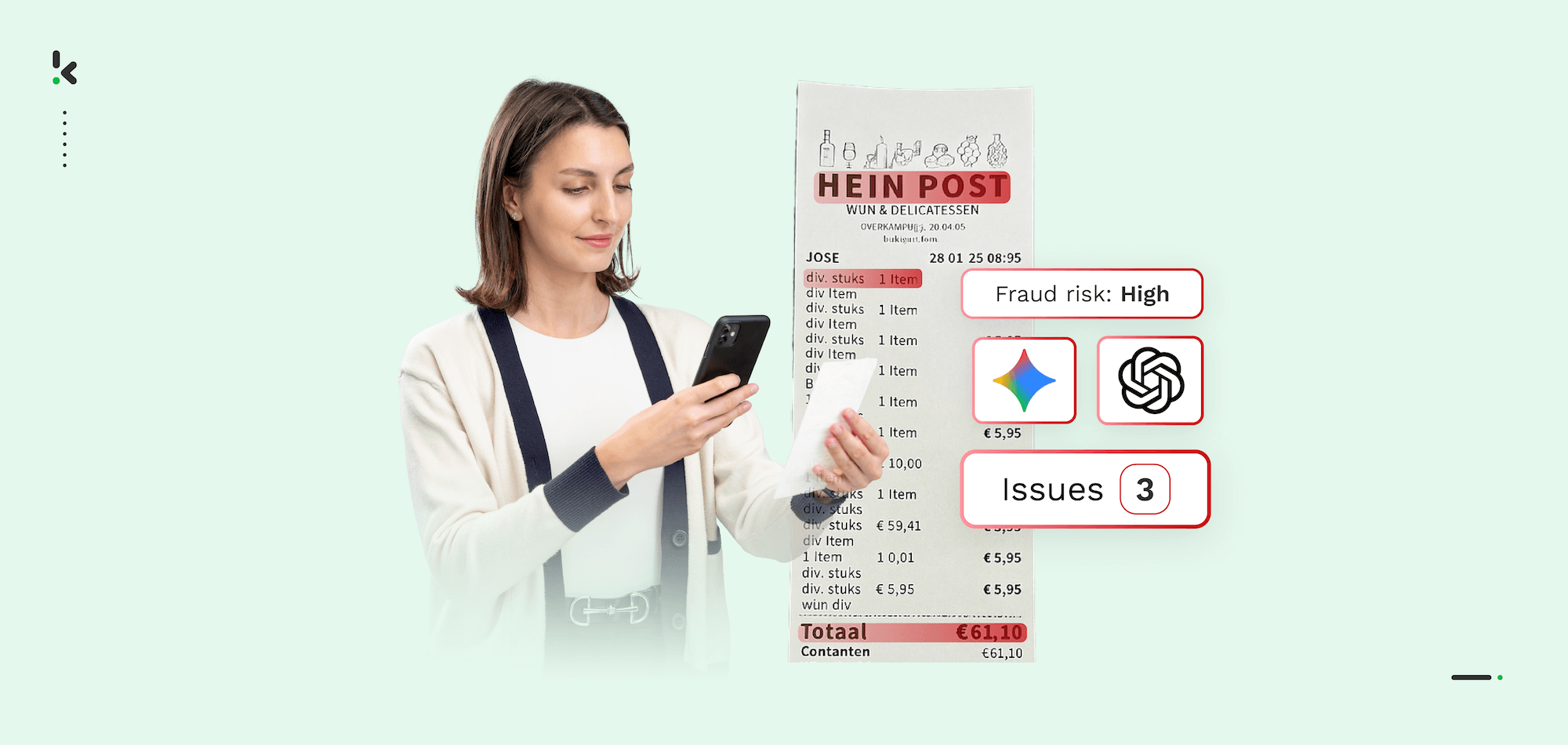

How Klippa Helps Stop AI-Generated Document Fraud

Klippa DocHorizon is an advanced Intelligent Document Processing Platform that leverages technologies like OCR (Optical Character Recognition), AI, and Machine Learning to help you combat document fraud and streamline your document workflows.

- Custom Workflows: Create your unique document workflows by simply connecting any relevant DocHorizon modules: data extraction, capture, classification, conversion, anonymization, verification, and more.

- Fraud Detection: Strengthen fraud detection with any of our automated fraud detection modules (we support all of the methods listed above!) for fool-proof & real-time document fraud detection before it causes your business any harm.

- Robust Identity Verification: Enhance security with our comprehensive identity verification module. This includes age verification, selfie verification, liveness detection, and NFC checks to prevent fraud and ensure user identity authenticity.

- Wide Document Support: Process and detect fraud on any document in all Latin alphabet languages, or customize data fields for extraction for any use cases.

- Integration Capabilities: Easily integrate our solutions directly on the platform, which supports over 50 data integration options, including cloud solutions, email parsing, CRM, ERP, and accounting software.

- Security & Compliance: Stay compliant and secure by default with Klippa, an ISO 27001 certified partner.

FAQ

Passports, driver’s licenses, national ID cards, and birth certificates are the most vulnerable. Generative AI can reproduce their layouts, fonts, and security elements with convincing realism. Klippa’s global template database helps identify subtle discrepancies that can reveal a forgery.

Yes. Modern deepfake tools can manipulate both static selfies and video-based liveness prompts, making them appear authentic to the human eye and simple systems. Klippa counters this with passive biometric checks combined with device trust and network analysis.

Multi-factor verification is highly effective because it requires fraudsters to bypass multiple independent security layers at the same time. Klippa orchestrates document forensics, location and network context checks, device trust analysis, and biometric verification to create a robust defense.

Banking and finance, insurance, travel and hospitality, crypto exchanges, gig economy platforms, and government services are among the most exposed. In all these sectors, AI-enabled forgery can lead to significant financial, compliance, and trust impacts.

Klippa DocHorizon can process and verify most documents in under ten seconds. Automated checks analyze metadata, pixel patterns, MRZ validity, and security features simultaneously, providing instant pass or fail results.

Yes. Klippa is ISO 27001 certified and fully compliant with GDPR, CCPA, and other regional privacy and security requirements. This ensures you can strengthen fraud prevention without compromising legal obligations.

Klippa’s platform integrates seamlessly via API or SDK into existing KYC, onboarding, or booking workflows. This allows you to upgrade security without redesigning your operational systems.

AI tools will become faster, cheaper, and more realistic, making traditional document-only checks increasingly ineffective. The long-term solution lies in adopting verified digital identities and keeping fraud detection technology ahead of emerging threats.

Klippa DocHorizon automates multi-factor verification, adapts workflows to your industry and risk profile, and provides detection capabilities that are resistant to AI-generated forgeries. It also supports integration with digital identity wallets for future-proof security.